Facebook Reports Gains in Removing Objectionable Content

November 13, 2019 - 6:24PM

Dow Jones News

By Jeff Horwitz

Facebook Inc. reported gains in detecting hate speech, child-abuse imagery and terrorist propaganda on its platforms, arguing that its willingness to publish statistics on the removal of objectionable content shows its commitment to transparency.

"The systems we've built for addressing these issues are more advanced than what any other company has," Chief Executive Mark Zuckerberg said Wednesday, adding that other internet companies have avoided making similar disclosures because "they don't want to admit they have a problem too."

The Facebook co-founder was unusually blunt in his criticism of other internet and social-media platforms on a call with reporters, but he didn't specifically name any competitors or say where their detection efforts and disclosures fall short.

Facebook, Alphabet Inc.'s Google and Twitter Inc. are among the large social-media companies that release reports aimed at giving the public a window into their businesses. These firms have responded to persistent complaints about illegal and offensive content on their platforms by providing metrics to judge their progress in removing it, sometimes before it gets flagged by users.

Facebook's enforcement report shows the volume of problematic content it hosts remains staggering, with more than 12 million pieces of child nudity or sexual-abuse content alone being removed from the main Facebook platform and Instagram during the September quarter. But the company said the actual frequency with which such material is viewed is so small that it can't be reliably measured, with such posts accounting for less than 0.04% of what users actually saw.

The report marked the first time the company reported content-enforcement statistics for Instagram, which is significantly less successful than the Facebook platform at detecting terrorist propaganda, child exploitation and self-harm content before it is reported by users.

On Facebook, all but 3% of suicide and self-harm content is removed from the platform without reports from users, the company said. Instagram users are still responsible for flagging 21% of similar content that is eventually removed.

Vishal Shah, Instagram's head of product, said the disparity partially reflects Facebook having more mature artificial intelligence systems, and he pledged Instagram would make gains over time.

Facebook also touted its progress this year in removing posts that violate its policy barring illicit firearm and drug sales. It said about 6.7 million pieces of such content were removed from Facebook in the most recent quarter, up from nearly 1.5 million in the first quarter.

Facebook has faced criticism over its Marketplace operations in connection with gun sellers being able to dodge prohibitions designed to end such activity.

U.S. Attorney General William Barr and international officials in recent months have criticized Facebook's plan to roll out encrypted messaging across its services, a step that would make it harder for either the company or law enforcement to monitor for illegal activities.

Mr. Zuckerberg acknowledged "real tension" between encryption and safety. At the same time, he said Facebook should be credited for helping make society safer by doing a better job of policing the company's platforms.

Write to Jeff Horwitz at Jeff.Horwitz@wsj.com

(END) Dow Jones Newswires

November 13, 2019 19:09 ET (00:09 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

Alphabet (NASDAQ:GOOG)

Gráfica de Acción Histórica

De Mar 2024 a Abr 2024

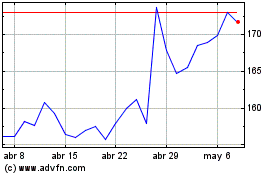

Alphabet (NASDAQ:GOOG)

Gráfica de Acción Histórica

De Abr 2023 a Abr 2024