Facebook to Ban QAnon Groups and Pages -- Update

October 6, 2020 - 7:20PM

Dow Jones News

By Sarah E. Needleman

Facebook Inc. said it would step up its crackdown on QAnon,

removing more groups and pages devoted to the fast-growing

conspiracy movement that has thrived on social media.

The move, announced on Tuesday, builds on Facebook's efforts

announced in August to remove QAnon pages and groups that included

discussions of potential violence. The company will now ban any

pages or groups dedicated to QAnon across Facebook, as well as

Instagram accounts focused on QAnon content. The new policy doesn't

ban individuals from posting about the movement.

The company said the new policy was based in part on an

increased understanding of how QAnon messaging is evolving. "We aim

to combat this more effectively with this update that strengthens

and expands our enforcement against the conspiracy theory

movement," the company said in a statement. Facebook also said it

expects renewed attempts to evade detection and that it could

update its content policies as needed.

The QAnon conspiracy theory centers on the idea that a powerful

group of child traffickers control the world and are undermining

President Trump with the help of other elites and mainstream news

outlets. Last year a Federal Bureau of Investigation field office

warned that QAnon and other conspiracies could spark violence in

the U.S., and QAnon adherents have discussed future plans to round

up or kill members of the supposedly evil cabal.

President Trump in August welcomed the support of QAnon

followers and, while saying he knew little about the movement,

suggested those who subscribe to it are "people who love our

country."

Social-media companies have received mixed reactions to their

policies around rule violators, with some arguing that the

companies are stifling free speech and others wanting them to take

a tougher stance.

Microsoft Corp.-owned LinkedIn has recently taken steps to

remove QAnon posts with misleading information in response to more

supporters going public on the career-networking platform. Twitter

Inc. has also pledged to increase enforcement against QAnon

conspiracy followers.

Policing QAnon content is just one of the broad

content-moderation issues that the world's largest social-media

companies are facing. Platforms have been grappling with the spread

of misinformation related to the coronavirus pandemic as well as

groups connected to the boogaloo movement. Its adherents' views are

wide-ranging, with a focus on overturning authority, according to

researchers who track extremist organizations.

Facebook and Twitter both moved Tuesday to place limits on posts

by President Trump in which he claimed the coronavirus isn't as

deadly as the common flu. The statement is widely considered false

by medical professionals. Facebook removed Mr. Trump's comment,

while Twitter appended a notice to his tweet explaining that it

violated its rules on spreading harmful information related to the

virus. Twitter said it didn't remove the tweet because it "may be

in the public's interest" to remain accessible.

A report last month from research firm Graphika Inc. draws a

connection between QAnon's online activities and those who strive

to play down the importance of health matters such as

vaccinations.

"The QAnon worldview has acted as a catalyst for the convergence

of online networked conspiracy communities, anti-[vaccination] and

anti-tech alike," Graphika said in its report. "In our Covid-19

maps, the core QAnon community and the Trump support group were

both deeply interconnected on a network level and mutually

amplifying each other's content and narratives."

--Jeff Horwitz contributed to this article.

Write to Sarah E. Needleman at sarah.needleman@wsj.com

(END) Dow Jones Newswires

October 06, 2020 20:05 ET (00:05 GMT)

Copyright (c) 2020 Dow Jones & Company, Inc.

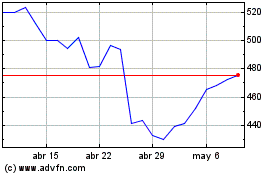

Meta Platforms (NASDAQ:META)

Gráfica de Acción Histórica

De Mar 2024 a Abr 2024

Meta Platforms (NASDAQ:META)

Gráfica de Acción Histórica

De Abr 2023 a Abr 2024